Reflections on APMdigest’s Observability Series — and Where We Go Next

Whether we call it APM or observability is bikeshedding. What really matters is ensuring systems deliver the service levels users expect. That’s where AI comes in.

Over the summer, APMdigest published a fantastic 12-part series on APM and observability, bringing together dozens of voices from across our industry. First things first: a huge thank you to Pete Goldin and the APMdigest team for pulling it together. If you haven’t read it yet, I highly recommend it. It’s one of the best ways to see how practitioners, vendors, and thought leaders are thinking about the future of monitoring and reliability.

Two topics stood out most in the series. The first is the ongoing wrestling match between the terms Application Performance Management (APM) and observability. The second is how AI will change this space over the coming years. Let’s take them one by one.

APM vs. Observability — The Bikeshedding Debate

When we talk about observability, it’s important to distinguish between the technical definition and the marketing definition. In control theory, observability means “a measure of how well internal states of a system can be inferred from knowledge of its external outputs” (Wikipedia). Software engineering borrowed that definition. In our domain, the “internal states” are what is actually happening out of sight: CPU cycles, memory consumption, packet flows, the actual bits moving through our systems. The “outputs” are what we measure: traces, metrics, logs, profiles, and ultimately what the end user sees.

It’s a good definition because it clearly states how we can make a system objectively more observable: by providing increasingly better external outputs that describe the internal states as closely as possible, until in theory one could determine the entire system’s behavior from its outputs. This is how engineers think, which is why it resonates so well with us.
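For readers who want the control-theory version spelled out, here is a brief sketch. It assumes a linear time-invariant system, an idealization that real software does not satisfy literally, and the symbols $A$, $C$, and $n$ are standard textbook notation rather than anything from the series. With internal state $x \in \mathbb{R}^n$ and measured output $y$:

$$
\dot{x}(t) = A\,x(t), \qquad y(t) = C\,x(t)
$$

the internal state can be fully reconstructed from the outputs exactly when the observability matrix has rank $n$:

$$
\mathcal{O} = \begin{bmatrix} C \\ CA \\ \vdots \\ CA^{n-1} \end{bmatrix}, \qquad \operatorname{rank}(\mathcal{O}) = n .
$$

Adding rows to $C$, that is, measuring more outputs, can only keep or raise that rank. That is the formal counterpart of the intuition that richer telemetry makes a system more observable.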

Furthermore, we can see this approach in action, as open projects and standards like Prometheus and OpenTelemetry evolve, adding new signals, more domains, and even semantic conventions to steadily improve what can be inferred from telemetry. Observability in the technical sense is just about making more of the system’s inner state visible.

But then there’s the marketing use of the term “observability,” particularly in how vendors brand and sell their offerings as “observability platforms.” Look under the hood and you’ll see evolution, not revolution. Many features touted as “new” under the observability banner — distributed tracing, combined app and infra visibility, end-user monitoring, business-centric insights — were already present in APM solutions long before.

APM, traditionally defined as the discipline of monitoring and managing the performance and availability of software applications, had long offered these capabilities. The key difference is that they were locked inside vendor walled gardens, fragmented across products, and often poorly implemented. Observability didn’t invent them; it generalized them and, crucially, opened them up.

That’s the real accomplishment of observability, in its marketing sense: expanding a space once dominated by closed APM vendors, breaking down their walls, and making telemetry collection a commodity.

Beyond that, does it really matter whether we call it APM or observability? The debate may have been useful to move the industry forward, but today it’s a distraction and a waste of energy; it’s bikeshedding. Use the terms however they suit you — what really matters is ensuring systems consistently deliver the service levels our users expect in the years ahead. And that’s where AI comes in.

AI in Observability — Hype, Reality, and What’s Missing

The APMdigest series also collected a wide range of AI predictions. We at Causely agree wholeheartedly with many of them. Machine learning is already reducing alert fatigue, clustering related events, and detecting anomalies faster than humans. LLMs are making telemetry more accessible in many languages, so engineers can query systems in their own tongue without having to learn domain-specific query languages. These are real wins.

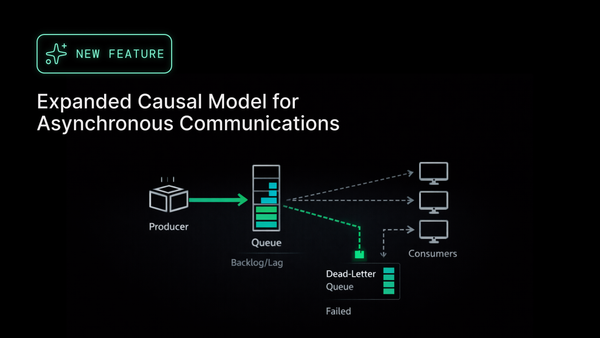

Where we diverge is in how far LLMs can take us. At their core, LLMs are pattern-matching machines. They can generate plausible explanations, but they don’t actually understand causality. That’s a critical limitation when the task is diagnosing why a system failed. Correlation is not causation — and if you’ve been on-call at 3 a.m., you know how costly the wrong guess can be.

LLMs are also inherently reactive. If I have to ask the chatbot why something is broken, it’s already too late. They’re not designed to proactively detect precursors of failure, to flag the subtle changes that precede an outage. That requires something different: causal reasoning.

Causal reasoning is what turns noise into signal. It builds an explicit model of how services depend on each other and how failures propagate. That model acts as a pre-processor layer: it distills raw telemetry into precise insights about what is really going on. Once you have that, LLMs can actually shine. They can take the output of causal analysis and do what they do best — generate natural-language explanations, propose remediations, even open pull requests to apply safe fixes.
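To make the idea of an explicit dependency model concrete, here is a deliberately tiny Python sketch. It is an illustration only, not a description of how Causely or any other product works: the service names, the `DEPENDS_ON` map, and both helper functions are hypothetical, and real causal analysis involves far more than a graph walk. It shows how a set of noisy symptoms can collapse to one candidate cause once the dependencies are written down.

```python
from collections import deque

# Hypothetical topology: each service maps to the services it calls.
DEPENDS_ON = {
    "frontend": ["checkout", "catalog"],
    "checkout": ["payments", "db"],
    "catalog": ["db"],
    "payments": [],
    "db": [],
}


def root_causes(depends_on, anomalous):
    """Return anomalous services whose direct dependencies all look healthy."""
    return sorted(
        svc
        for svc in anomalous
        if not any(dep in anomalous for dep in depends_on.get(svc, []))
    )


def impacted_by(depends_on, cause):
    """Return every service that depends on `cause`, directly or transitively."""
    # Invert the edges so we can walk from a dependency up to its callers.
    callers = {}
    for svc, deps in depends_on.items():
        for dep in deps:
            callers.setdefault(dep, []).append(svc)

    seen, queue = set(), deque([cause])
    while queue:
        for caller in callers.get(queue.popleft(), []):
            if caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return sorted(seen)


# Four services are alerting, but only one has no unhealthy dependency.
anomalous = {"frontend", "checkout", "catalog", "db"}
causes = root_causes(DEPENDS_ON, anomalous)
print("candidate root causes:", causes)
print("services it can explain:", impacted_by(DEPENDS_ON, causes[0]))
```

In this toy setup the four alerts reduce to a single candidate, `db`, along with the list of upstream services its failure would explain. That compact result, rather than the raw alert stream, is the kind of input an LLM could then turn into an explanation or a proposed fix.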

In other words, causal reasoning is the missing layer between raw observability data and generative AI. Without it, we risk drowning in correlations. With it, we unlock the path to truly autonomous reliability. We’ve written more about this in InfoQ, on our own blog, and in industry pieces like CIO Dive if you want to dive deeper.

Closing Thoughts

The APM vs. observability debate may make for lively discussion, but the more interesting question is how AI will actually change the way we run systems. At Causely, we agree with much of the optimism in the APMdigest series, but we believe the crucial next step is to go beyond correlation and embrace causal reasoning. That’s how we move from data to control, from dashboards to autonomous systems.

It’s a privilege to be part of a community that debates these questions so openly. We’re grateful to APMdigest for hosting the series, and we look forward to future editions. In the meantime, we’d love to hear your perspective — drop us a note at community@causely.ai or join the conversation with us on social media.